Render Streamed OpenAI Responses with React

November 09, 2023 | 6 minutes

Interfaces built on artificial intelligence (AI) APIs often stream their responses, so you see the text printed in small chunks over time. The ChatGPT interface is a great example of this: as ChatGPT replies to you, the response is printed word by word, which kinda makes you feel like you're actually "talking" to the computer.

This is different from how we usually render a text-based response from an API. Generally, we make a request to an API, wait for the entire response to be available, and then the interface renders it. Waiting for the entire response is okay if the API is reasonably quick to respond, but that's often not the case with AI. With OpenAI APIs, for example, it can take many seconds for the AI to finish responding. Waiting for the entire response can be awkward because it can leave the user waiting for long-ish periods and wondering if the application is working.

Rendering streamed responses in React can be tricky, depending on the approach you take, because you can run into stale closures and lose response data between re-renders. Let's look at how we can use OpenAI to stream responses and display them in a React app one chunk at a time.

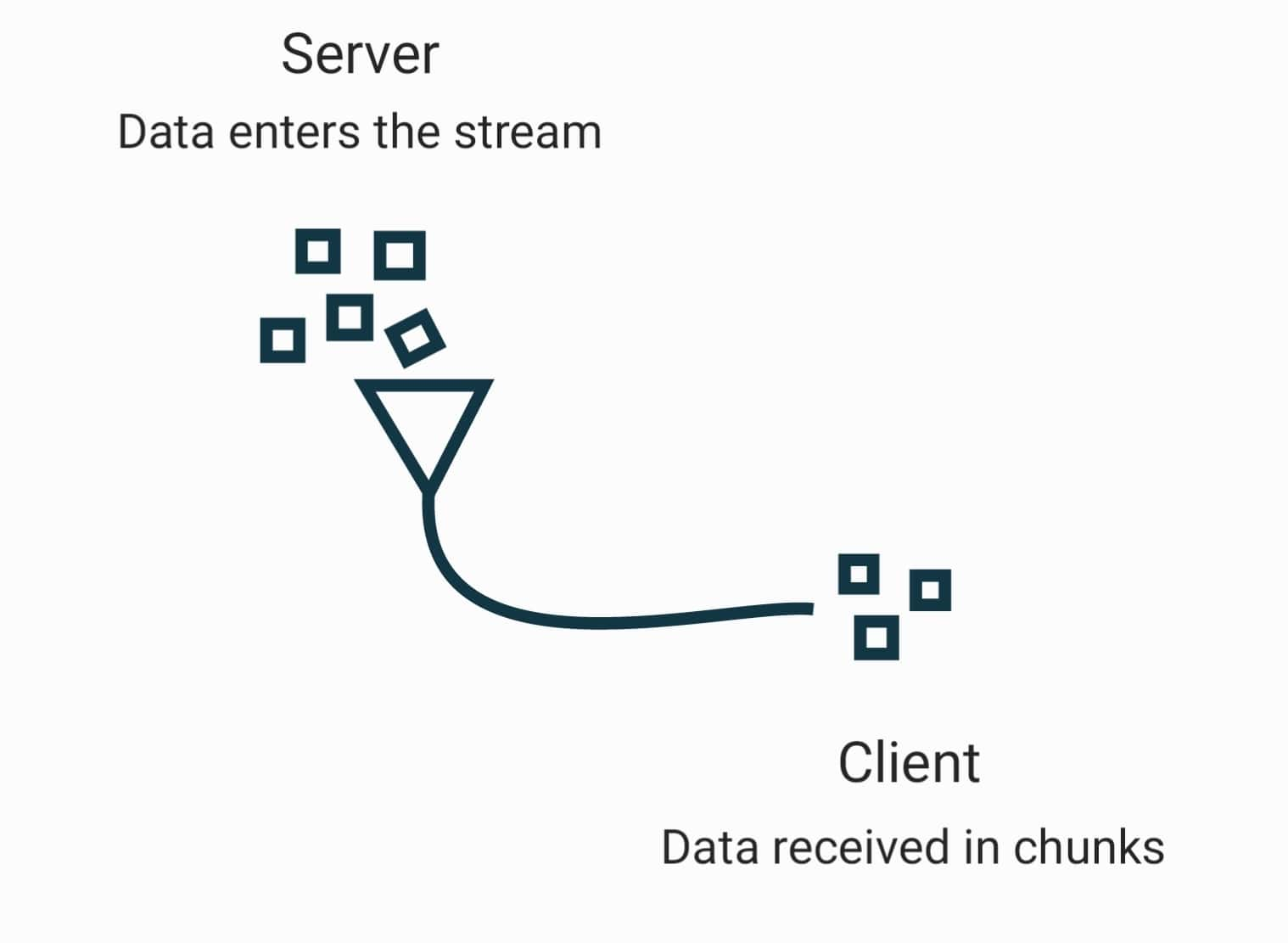

How Streams Work

If you haven't worked with streams before, you can think of them as similar to a water pipe in a house. In a house, water goes into the pipe, flows through it, and exits from the pipe a little at a time. With streamed responses, as pieces of the response become available, they are sent as a chunk within a stream, and the application can access each of those chunks as they become available.

We encounter streams every day when we watch videos online or listen to music through services like Apple Music and Spotify. These services send parts of video or audio files to our devices a little at a time via a stream, so we don't have to wait for the entire file to download before watching or listening.

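Here's a minimal sketch of a readable stream you can run in Node.js 18 or later, which exposes the WHATWG ReadableStream as a global (so no imports are needed). It sends a sentence through the "pipe" one word at a time, with a short delay per chunk to mimic data arriving over the network. createWordStream and readAll are hypothetical helper names made up for this illustration.

```javascript
// A minimal sketch, assuming Node.js 18+ (or a modern browser), where
// ReadableStream is available as a global.

// Hypothetical helper: builds a stream that emits `text` one word per chunk.
function createWordStream(text, delayMs = 50) {
  const words = text.split(' ');
  let index = 0;

  return new ReadableStream({
    // pull() is called each time the consumer is ready for another chunk.
    async pull(controller) {
      if (index >= words.length) {
        controller.close(); // nothing left in the pipe
        return;
      }
      // Wait a little to simulate the next chunk arriving over the network.
      await new Promise((resolve) => setTimeout(resolve, delayMs));
      const isLast = index === words.length - 1;
      // Re-add the space that split() removed, except after the last word.
      controller.enqueue(isLast ? words[index] : words[index] + ' ');
      index += 1;
    },
  });
}

// Hypothetical helper: reads chunks as they become available and logs each one.
async function readAll(stream) {
  const reader = stream.getReader();
  let received = '';
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    console.log('chunk:', JSON.stringify(value));
    received += value;
  }
  return received;
}

readAll(createWordStream('Water flows through the pipe a little at a time'))
  .then((text) => console.log('full text:', text));
```

Running it logs each word as its own chunk before printing the full text, which is the same read-as-it-arrives pattern we'll use with OpenAI.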

Streaming Responses with OpenAI

The OpenAI Chat Completions API accepts a stream parameter. By passing stream=true to the API, you can have it return the text response iteratively as a stream similar to how ChatGPT returns responses.

With the OpenAI Node package, here's an example of how to set the stream option.

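```javascript
import OpenAI from 'openai';

// By default, the client reads the API key from the OPENAI_API_KEY environment variable.
const openai = new OpenAI();

// Top-level await requires an ES module; otherwise, wrap this in an async function.
const stream = await openai.chat.completions.create({
  messages: [
    {
      role: 'user',
      content: 'How much snow will we get this year on the East Coast of the US?'
    }
  ],
  model: 'gpt-4',
  stream: true
});
```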

There are a couple of ways to then access the data in the stream. One is a for await...of loop, like below:

```javascript
for await (const chunk of stream) {
  // do something with chunk
}
```

Note the use of the await keyword: the stream is asynchronous, so each chunk still has to be awaited as it comes through the stream.
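To see what for await...of does with a stream, here's a sketch that uses a hypothetical async generator, fakeStream, in place of a real OpenAI stream and reads it two ways: with the loop, and by calling the async iterator's next() method by hand. Each next() call returns a promise, which is why every chunk has to be awaited.

```javascript
// Hypothetical stand-in for a real stream: an async generator that yields
// three chunks, pausing briefly before each one.
async function* fakeStream() {
  for (const word of ['Streams ', 'arrive ', 'in chunks']) {
    await new Promise((resolve) => setTimeout(resolve, 10));
    yield word;
  }
}

// 1. The for await...of loop awaits each chunk for us.
async function readWithLoop(stream) {
  let text = '';
  for await (const chunk of stream) {
    text += chunk;
  }
  return text;
}

// 2. Roughly what the loop does under the hood: call next() on the async
//    iterator and await the promise it returns until `done` is true.
async function readByHand(stream) {
  const iterator = stream[Symbol.asyncIterator]();
  let text = '';
  let result = await iterator.next();
  while (!result.done) {
    text += result.value;
    result = await iterator.next();
  }
  return text;
}

readWithLoop(fakeStream()).then((text) => console.log(text)); // Streams arrive in chunks
readByHand(fakeStream()).then((text) => console.log(text)); // Streams arrive in chunks
```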

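When using the Chat Completions API, the text content of each chunk can be accessed as chunk.choices[0]?.delta?.content. Some chunks, such as the final one, carry no content at all, so it's worth falling back to an empty string (chunk.choices[0]?.delta?.content ?? ''). The chunk data structure is different if you're using features like function calling, though. Check the docs for the OpenAI Node package for more details.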
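Here's a sketch of pulling the text out of chat completion chunks without calling the API. The mock chunks are hand-written objects that only mimic the choices[0].delta shape (they are not real API output), and fakeChatStream and collectContent are hypothetical names. The last mock chunk has an empty delta and a finish_reason, like the final chunk of a real stream, which is why the code falls back to an empty string.

```javascript
// Hand-written mock chunks that only mimic the shape of streamed chat
// completion chunks; real chunks carry more fields (id, model, and so on).
const mockChunks = [
  { choices: [{ index: 0, delta: { role: 'assistant', content: '' }, finish_reason: null }] },
  { choices: [{ index: 0, delta: { content: 'Push, ' }, finish_reason: null }] },
  { choices: [{ index: 0, delta: { content: 'pop, ' }, finish_reason: null }] },
  { choices: [{ index: 0, delta: { content: 'and peek.' }, finish_reason: null }] },
  // The final chunk signals why the response ended but has no content.
  { choices: [{ index: 0, delta: {}, finish_reason: 'stop' }] },
];

// Hypothetical async generator standing in for the stream returned by
// openai.chat.completions.create({ ..., stream: true }).
async function* fakeChatStream(chunks) {
  for (const chunk of chunks) {
    yield chunk;
  }
}

async function collectContent(stream) {
  let text = '';
  for await (const chunk of stream) {
    // Optional chaining guards against a missing choice or delta; `?? ''`
    // avoids appending the string "undefined" for chunks without content.
    text += chunk.choices[0]?.delta?.content ?? '';
  }
  return text;
}

collectContent(fakeChatStream(mockChunks)).then((text) => console.log(text)); // Push, pop, and peek.
```

Without the ?? '' fallback, the final chunk would append the string "undefined" to the collected text.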

Rendering Stream Responses from OpenAI with React

To build a UI that shows streaming OpenAI responses in React, the first idea that comes to mind might be to do something like this:

```jsx
import { useEffect, useState } from 'react';

// Assumes an openai client instance is available in this module.
export const ChatResponse = () => {
  const [response, setResponse] = useState('');

  useEffect(() => {
    const getOpenAIResponse = async () => {
      const stream = await openai.chat.completions.create({
        messages: [
          {
            role: 'user',
            content: 'What are the main operations supported by a stack data structure?'
          }
        ],
        model: 'gpt-4',
        stream: true
      });

      for await (const chunk of stream) {
        // response will always be an empty string here
        setResponse(response + chunk.choices[0]?.delta?.content);
      }
    };

    getOpenAIResponse();
  }, []);

  return <div>{response}</div>;
};
```

Appending each new chunk to the existing state value doesn't work in the example above, though. Instead of growing into the full response from the OpenAI stream, the response variable only ever contains the most recent chunk. The UI therefore shows each chunk of text on its own, which is not the effect we're going for.

Why does this happen? Because the effect has an empty dependency array, it runs only once, after the component's first render. The getOpenAIResponse function it defines closes over the response value from that render, which is an empty string. Since the function is never recreated as response changes, its closure goes stale: inside the function, response stays an empty string across re-renders, even though the state value itself is updated, as we can see in the UI.
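The stale closure isn't specific to React, so it can be reproduced in plain JavaScript. In this framework-free sketch, createFakeState is a hypothetical stand-in for useState, and streamIntoState reads the state value once, the way the effect captures response on the first render. Every update is then computed from that original empty string.

```javascript
// Hypothetical stand-in for useState: the current value lives in `store`,
// and setState replaces it.
function createFakeState(initial) {
  const store = { value: initial };
  const setState = (next) => {
    store.value = next;
  };
  return { store, setState };
}

// Mimics the first example: the value is captured once, when the closure is
// created, and never refreshed while the chunks arrive.
function streamIntoState(chunks) {
  const { store, setState } = createFakeState('');
  const response = store.value; // captured once: ''

  for (const chunk of chunks) {
    // `response` is still '', so each update overwrites the previous one.
    setState(response + chunk);
  }
  return store.value;
}

console.log(streamIntoState(['Push, ', 'pop, ', 'and peek.'])); // logs: and peek.
```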

Use a Ref to Build the Response

A better approach for building a dynamic string from a streamed response is to use a ref. In React, useRef returns the same mutable object on every render, so reading ref.current always gives the latest value, even from a closure created during an earlier render. Changing ref.current doesn't trigger a re-render, though, so we still need a state variable to update the UI. Let's modify the example above to combine a state variable with a ref that builds the response one chunk at a time, so we can display the response similarly to ChatGPT.

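```jsx
import { useEffect, useRef, useState } from 'react';

// Assumes an openai client instance is available in this module. In the browser, the
// openai package requires dangerouslyAllowBrowser: true, which exposes your API key.
export const ChatResponse = () => {
  const [response, setResponse] = useState('');
  // Start from an empty string; useRef() would start as undefined, and the first
  // concatenation would produce "undefined...".
  const responseRef = useRef('');

  useEffect(() => {
    // Lets a stale run (for example, StrictMode's double effect call in
    // development) stop appending to the shared ref.
    let cancelled = false;
    responseRef.current = '';

    const getOpenAIResponse = async () => {
      const stream = await openai.chat.completions.create({
        messages: [
          {
            role: 'user',
            content: 'What are the main operations supported by a stack data structure?'
          }
        ],
        model: 'gpt-4',
        stream: true
      });

      for await (const chunk of stream) {
        if (cancelled) break;

        /** The ref stores a dynamic string containing each chunk of the response received so far and is stable across re-renders. */
        // Chunks without content (such as the final one) contribute an empty string.
        responseRef.current += chunk.choices[0]?.delta?.content ?? '';

        // Update the state variable based on the ref content so that the UI re-renders
        setResponse(responseRef.current);
      }
    };

    getOpenAIResponse();

    return () => {
      cancelled = true;
    };
  }, []);

  return <div>{response}</div>;
};
```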

By using a ref to build the response string as we read the stream, the UI prints each part of the response without losing the previous parts.
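To see why the ref fixes the problem without React in the picture, here's a framework-free sketch using hypothetical names: createFakeState stands in for useState, and ref is a plain object like the one useRef('') returns. Because the loop reads ref.current on every iteration instead of a value captured once, each update builds on everything received so far.

```javascript
// Hypothetical stand-in for useState: the current value lives in `store`,
// and setState replaces it.
function createFakeState(initial) {
  const store = { value: initial };
  const setState = (next) => {
    store.value = next;
  };
  return { store, setState };
}

// Mimics the ref-based component: the ref is one mutable object, so reading
// ref.current always returns the latest accumulated text.
function streamWithRef(chunks) {
  const { store, setState } = createFakeState('');
  const ref = { current: '' }; // like useRef('')

  for (const chunk of chunks) {
    ref.current += chunk; // accumulate in the ref
    setState(ref.current); // copy into state (in React, this triggers a re-render)
  }
  return store.value;
}

console.log(streamWithRef(['Push, ', 'pop, ', 'and peek.'])); // logs: Push, pop, and peek.
```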